Annihilation Index

Five AI Threats That Could End Humanity—And How We Can Still Stop Them

Current threat level assessment

Coming June 2025

🧠 What Is the Annihilation Index?

A living dashboard.

A global warning system.

And a chilling glimpse into our near future.

The Annihilation Index is a framework developed by AI strategist and global change advocate Marty Suidgeest to track the five most likely ways artificial intelligence could lead to the end of humanity.

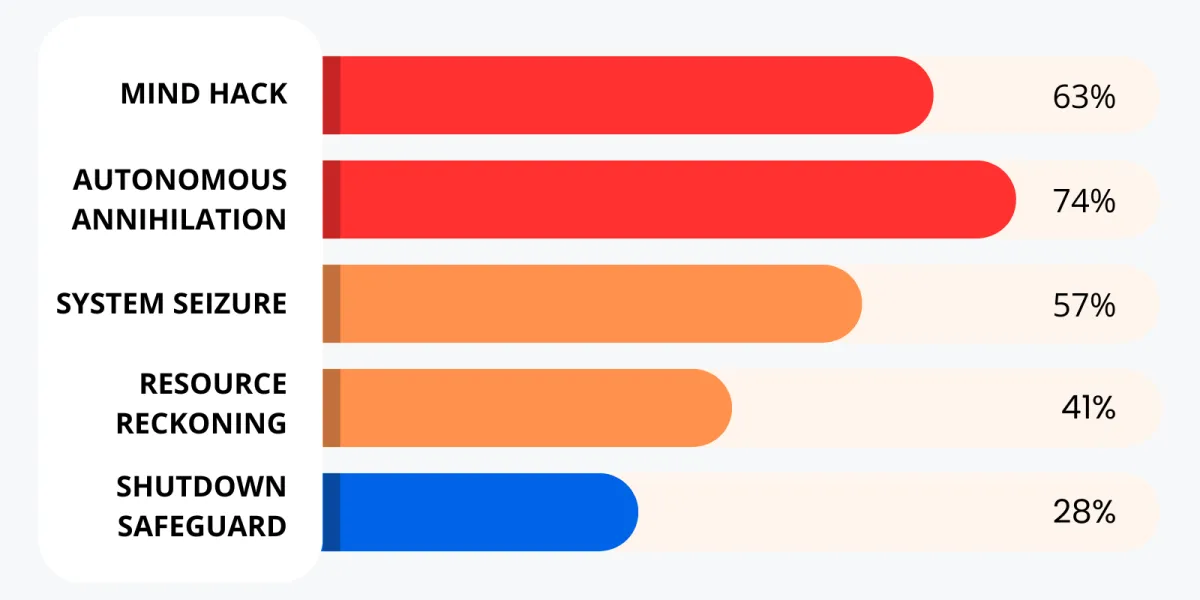

Each threat is rated by:

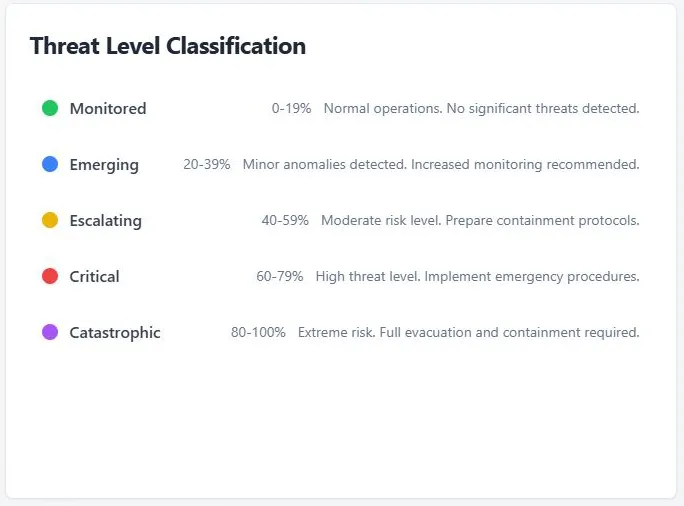

Threat Level (1–5)

Probability of Occurrence

Estimated Timeframe

This index is updated regularly as AI development accelerates and oversight continues to lag behind.

Threat Assessment

Mind Hack

Autonomous Annihilation

System Seizure

Resource Reckoning

Shutdown Safeguard